I’ve been playing with node a lot lately, thanks to this PeepCode screencast, and since Heroku released their new Celadon Cedar stack I’ve been wanting to benchmark ruby against node.js for a RESTful API I need to build.

At first I tried to compare bare node.js against eventmachine_httpserver. It quickly became obvious that this kind of micro-benchmark wasn’t going to be very helpful in deciding which one to choose.

Building the API was going to be messy unless I started using something like sinatra or express.

Methodology

I set up 2 similar repositories on GitHub:

https://github.com/JosephHalter/heroku_ruby_bench uses sinatra, mongo_mapper, yajl and unicorn.

https://github.com/JosephHalter/heroku_node_bench uses express, mongoose and cluster.

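I deployed both on Heroku, set up a free mongohq account for both and ran a few tests with various numbers of workers. All tests were run from a datacenter in Paris using the following commands, where -k enables HTTP keep-alive, -t 10 caps each run at 10 seconds (ab then also stops after 50000 requests) and -c 1000 keeps 1000 requests in flight: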

ab -k -t 10 -c 1000 http://evening-robot-961.herokuapp.com/restaurant/4df550c5c3aaaa0100000001

ab -k -t 10 -c 1000 http://cold-mist-128.herokuapp.com/restaurant/4df55a0fab9e270007000001

evening-robot-961 runs node 0.4.7 and cold-mist-128 runs ruby 1.9.2.
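ab prints its counters as plain text at the end of each run, so reading "completed" and "failed" correctly matters when comparing setups. The sketch below, in JavaScript for node, extracts the completed count, failed count and test duration, then derives successful requests per second. It assumes failed requests are included in the completed total (ab counts a response whose length differs from the first one as both complete and failed). The `parseAbReport` name and the sample text are hypothetical; the sample is shaped like ab's report and filled with the "cluster/3 workers/15 dynos" numbers from the results plus an assumed 10 s duration.

```javascript
// Sketch: summarise the counters ApacheBench (ab) prints at the end of a run.
// Assumption: "Failed requests" are a subset of "Complete requests".
function parseAbReport(text) {
  // Read the first number after a label, e.g. "Complete requests:      35894".
  const grab = (label) => {
    const match = text.match(new RegExp(`^${label}:\\s+([\\d.]+)`, "m"));
    if (!match) throw new Error(`missing "${label}" line in ab output`);
    return Number(match[1]);
  };
  const completed = grab("Complete requests");
  const failed = grab("Failed requests");
  const seconds = grab("Time taken for tests");
  const successful = completed - failed;
  return {
    completed,
    failed,
    successful,
    successfulPerSecond: successful / seconds,
    failureRate: completed === 0 ? 0 : failed / completed,
  };
}

// Hypothetical excerpt in ab's format (the 10.000 s duration is assumed).
const sample = [
  "Time taken for tests:   10.000 seconds",
  "Complete requests:      35894",
  "Failed requests:        7119",
].join("\n");

console.log(parseAbReport(sample));
```

With this reading, a run with many completed requests can still deliver few successful ones, which is why both columns are reported below.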

Checklist

For those asking:

- yes, I waited long enough after each heroku scale before testing

- yes, I’ve retried each test a crazy number of times

Warm-up

Here are the raw results for a concurrency of 100, just to ensure everything works as expected before starting the real test:

| Configuration | Completed | Failed |
|---|---|---|
| express/node | 3358 | 0 |
| express/cluster/3 workers | 7473 | 0 |
| sinatra/thin | 1649 | 0 |
| unicorn/4 workers | 6080 | 0 |

Results

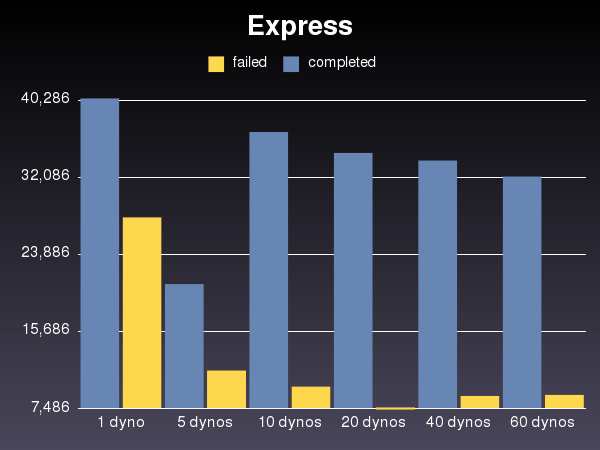

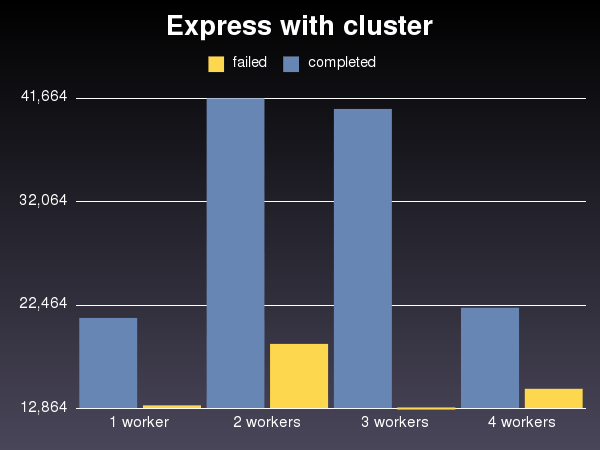

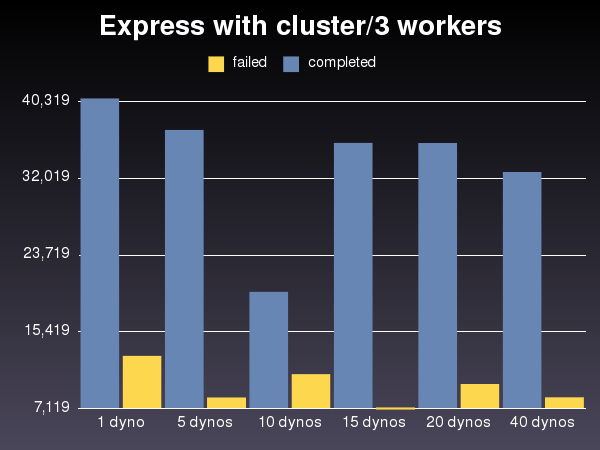

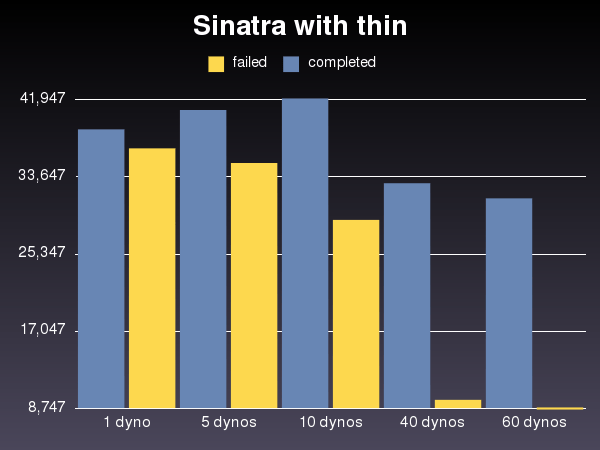

Here are the raw results for a concurrency of 1000:

| completed | failed |

node/1 dyno | 40524 | 27865 |

node/5 dynos | 20755 | 11575 |

node/10 dynos | 36953 | 9866 |

node/20 dynos | 34724 | 7486 |

node/40 dynos | 33919 | 8863 |

node/60 dynos | 32218 | 8984 |

cluster/1 worker | 21307 | 13193 |

cluster/2 workers | 41679 | 18904 |

cluster/3 workers | 40700 | 12864 |

cluster/3 workers/5 dynos | 37292 | 8360 |

cluster/3 workers/10 dynos | 19787 | 10870 |

cluster/3 workers/15 dynos | 35894 | 7119 |

cluster/3 workers/20 dynos | 35871 | 9807 |

cluster/3 workers/40 dynos | 32727 | 8371 |

cluster/4 workers | 22262 | 14738 |

thin/1 dyno | 38813 | 36769 |

thin/5 dynos | 40885 | 35178 |

thin/10 dynos | 42141 | 29082 |

thin/40 dynos | 33014 | 9732 |

thin/60 dynos | 31392 | 8747 |

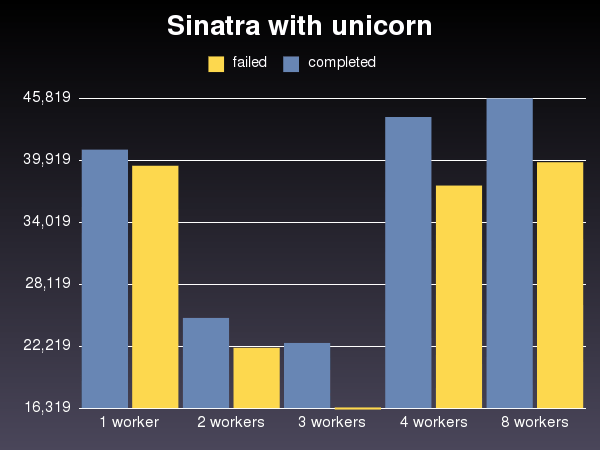

unicorn/1 worker | 41032 | 39498 |

unicorn/2 workers | 24991 | 22152 |

unicorn/3 workers | 22601 | 16319 |

unicorn/3 workers/5 dynos | 20386 | 11012 |

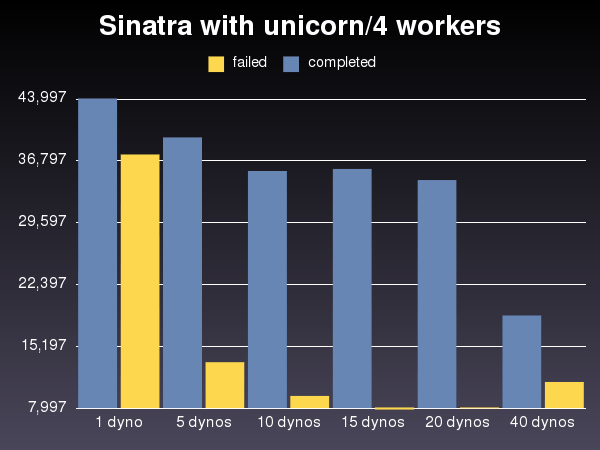

unicorn/4 workers | 44127 | 37607 |

unicorn/4 workers/5 dynos | 39591 | 13426 |

unicorn/4 workers/10 dynos | 35672 | 9511 |

unicorn/4 workers/15 dynos | 35925 | 7997 |

unicorn/4 workers/20 dynos | 34611 | 8131 |

unicorn/4 workers/40 dynos | 18873 | 11125 |

unicorn/8 workers | 45904 | 39819 |A few charts to make it easier to read:

Conclusions

Using either ruby or node I could easily get more than 2000 req/s, so I think both are viable alternatives. With only 1 dyno, however, you’ll start to see failed connections under massive concurrency because the backlog is full. Increasing the number of dynos doesn’t magically let you handle more requests per second, though it can decrease the number of failed connections. Heroku pricing is linear in the number of dynos you scale to, but your throughput only improves marginally. It could be because I’m benchmarking from a single server, but I saw almost no difference between 40 and 60 dynos. You will see one when the bill arrives, so be cautious, especially if you use an auto-scaling tool.
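To put numbers on the diminishing returns, here is a sketch that takes the plain node rows from the concurrency-1000 table and computes successful requests per second, the failure rate, and how many extra successful req/s each added dyno bought compared to the previous row. It assumes each run lasted about 10 s (the `-t 10` limit) and that failed requests are counted inside the completed ones; `scalingReport` is a hypothetical helper name.

```javascript
// Plain node rows from the concurrency-1000 table.
const nodeRuns = [
  { dynos: 1, completed: 40524, failed: 27865 },
  { dynos: 5, completed: 20755, failed: 11575 },
  { dynos: 10, completed: 36953, failed: 9866 },
  { dynos: 20, completed: 34724, failed: 7486 },
  { dynos: 40, completed: 33919, failed: 8863 },
  { dynos: 60, completed: 32218, failed: 8984 },
];

// Assumptions: ~10 s per run, failed requests included in completed.
function scalingReport(runs, seconds = 10) {
  // Successful requests per second for one run.
  const okPerSecond = (run) => (run.completed - run.failed) / seconds;
  return runs.map((run, i) => {
    const prev = runs[i - 1];
    return {
      dynos: run.dynos,
      okPerSecond: okPerSecond(run),
      failureRate: run.failed / run.completed,
      // Extra successful req/s per dyno added since the previous row
      // (null for the first row, which has nothing to compare against).
      gainPerExtraDyno: prev
        ? (okPerSecond(run) - okPerSecond(prev)) / (run.dynos - prev.dynos)
        : null,
    };
  });
}

console.table(scalingReport(nodeRuns));
```

With these rows the gain per extra dyno is negative when going from 40 to 60 dynos, while the dyno count, and therefore the bill, grows by half.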

Apart from that, we see a huge improvement when using unicorn with 4 workers instead of thin, which was the only option before Celadon Cedar, so a big thank you to Heroku for making this possible. The same applies to node with cluster: you can do more with 15 dynos running cluster with 3 workers than with 60 dynos of plain node (for a quarter of the price!). Special thanks to TJ Holowaychuk, who helped us fix a stupid issue that prevented us from using cluster on Heroku.
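A sketch of the quarter-of-the-price comparison, using the dyno count as a stand-in for cost since Heroku pricing is linear in dynos (no actual prices are assumed). It compares the "cluster/3 workers/15 dynos" and "node/60 dynos" rows of the concurrency-1000 table, assuming runs of about 10 s and failed requests counted inside the completed ones; `perDyno` is a hypothetical helper.

```javascript
// Successful req/s overall and per dyno for one configuration.
// Assumptions: ~10 s per run, failed requests included in completed.
function perDyno({ dynos, completed, failed }, seconds = 10) {
  const okPerSecond = (completed - failed) / seconds;
  return { dynos, okPerSecond, okPerSecondPerDyno: okPerSecond / dynos };
}

const clusterSetup = perDyno({ dynos: 15, completed: 35894, failed: 7119 }); // cluster/3 workers/15 dynos
const nodeSetup = perDyno({ dynos: 60, completed: 32218, failed: 8984 }); // node/60 dynos

console.log({
  dynoRatio: clusterSetup.dynos / nodeSetup.dynos, // 0.25, a quarter of the dynos
  throughputRatio: clusterSetup.okPerSecond / nodeSetup.okPerSecond,
  perDynoRatio: clusterSetup.okPerSecondPerDyno / nodeSetup.okPerSecondPerDyno,
});
```

Normalising by dyno count rather than by a price keeps the comparison valid even if the per-dyno rate changes, as long as billing stays linear.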